I'm nearly a full month into using AWS S3 to back up roughly 7TB of data, and so far it's been a great experience. All of the alerting I put in place worked as expected, and I got notifications before and after I exceeded my budget (which was expected for the first month due to the cost of uploading the data). One thing I didn't notice until today was that my forecasted bill for next month was about $9 instead of the $6-7 I expected. Not a big deal, but it still worried me, since I was expecting one price, seeing another, and it didn't add up to what the AWS billing calculator said.

I did some more digging in the billing breakdown to see where each penny was going and noticed I was spending about $3 on "GlacierStagingStorage", which is billed at $0.021/GB-month compared to Glacier's normal $0.00099/GB-month. Again, that isn't a huge deal unless you have a few hundred GB of files sitting there. This sent me down the rabbit hole of finding out what these "staging" files are and how to get rid of them.
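To see why a small staging line item stands out, here's the arithmetic as a tiny Python sketch. It uses only the prices quoted above and assumes 1 TB = 1,000 GB; actual rates vary by region and change over time, so treat the numbers as rough.

```python
# Rough monthly storage cost using the per-GB-month prices quoted above.
# Assumptions: 1 TB = 1,000 GB and these list prices apply to your region.
STAGING_PRICE = 0.021    # $/GB-month for GlacierStagingStorage (as quoted)
ARCHIVE_PRICE = 0.00099  # $/GB-month for the Glacier tier (as quoted)


def monthly_cost(gb: float, price_per_gb: float) -> float:
    """Dollars per month for `gb` gigabytes at `price_per_gb`."""
    return gb * price_per_gb


def gb_for_cost(dollars: float, price_per_gb: float) -> float:
    """Invert the formula: how many GB does a given monthly charge imply?"""
    return dollars / price_per_gb


if __name__ == "__main__":
    archive = monthly_cost(7_000, ARCHIVE_PRICE)   # the ~7TB backup
    staging_gb = gb_for_cost(3.00, STAGING_PRICE)  # the ~$3 staging line item
    print(f"archive: ${archive:.2f}/month")
    print(f"staging: ~{staging_gb:.0f} GB")
    print(f"same GB archived instead: ${monthly_cost(staging_gb, ARCHIVE_PRICE):.2f}/month")
```

With these inputs the ~7TB backup works out to about $6.93 a month, right in the $6-7 range I expected, while $3 of staging implies roughly 143 GB of orphaned data billed at about 21 times the archive rate.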

Long story short, when you upload large files to S3, most tools split them into parts (a "multipart upload") rather than sending each file in one piece. If an upload doesn't complete for one reason or another, the parts that were already uploaded just hang out in this staging storage (and keep getting billed) waiting for the rest of an upload that will never finish. Apparently there are ways to find and clean up these incomplete parts manually using the CLI, but some people complain it's tedious, and the only other method I found involves S3 Storage Lens, which takes 48+ hours to update after you configure it and doesn't guarantee future incomplete uploads will get cleaned up either.
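For reference, the manual route boils down to listing incomplete uploads (`aws s3api list-multipart-uploads --bucket ...`) and aborting each one (`aws s3api abort-multipart-upload --bucket ... --key ... --upload-id ...`). Here's a minimal Python sketch of the same idea using boto3, assuming boto3 is installed and credentials are configured. `clean_bucket`, `stale_uploads`, and the bucket name are hypothetical names for illustration, and it defaults to a dry run so it only prints what it would abort.

```python
"""Find (and optionally abort) incomplete multipart uploads in one bucket.

Sketch assumptions: boto3 is installed and AWS credentials are configured.
"""
from datetime import datetime, timedelta, timezone


def stale_uploads(uploads, now, older_than_days):
    """Return the uploads initiated more than `older_than_days` ago.

    `uploads` are dicts shaped like the entries in the "Uploads" list that
    ListMultipartUploads returns; only "Key", "UploadId", and "Initiated" are used.
    """
    cutoff = now - timedelta(days=older_than_days)
    return [u for u in uploads if u["Initiated"] < cutoff]


def clean_bucket(bucket, older_than_days=2, dry_run=True):
    import boto3  # imported here so stale_uploads() works without boto3

    s3 = boto3.client("s3")
    paginator = s3.get_paginator("list_multipart_uploads")
    uploads = []
    for page in paginator.paginate(Bucket=bucket):
        uploads.extend(page.get("Uploads", []))  # key is absent if none exist

    now = datetime.now(timezone.utc)
    for u in stale_uploads(uploads, now, older_than_days):
        action = "would abort" if dry_run else "aborting"
        print(f"{action}: {u['Key']} (started {u['Initiated']})")
        if not dry_run:
            # Aborting discards the parts uploaded so far, freeing their storage.
            s3.abort_multipart_upload(Bucket=bucket, Key=u["Key"], UploadId=u["UploadId"])
```

Calling `clean_bucket("my-backup-bucket")` (a placeholder name) only lists candidates; pass `dry_run=False` to actually abort them. It's a one-time cleanup, though, which is exactly why a lifecycle rule is the nicer long-term fix.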

So I went searching and found a post from somebody who claimed to do this with Lifecycle Management rules, but finding an actual guide was a bit hard (possibly because it's so simple to do). I worked through it with one of my co-workers just to make sure I didn't create a rule that would accidentally delete terabytes of data. So here's a quick and easy guide to creating a Lifecycle rule to clean up those pesky files!

Creating the Lifecycle Management Rule

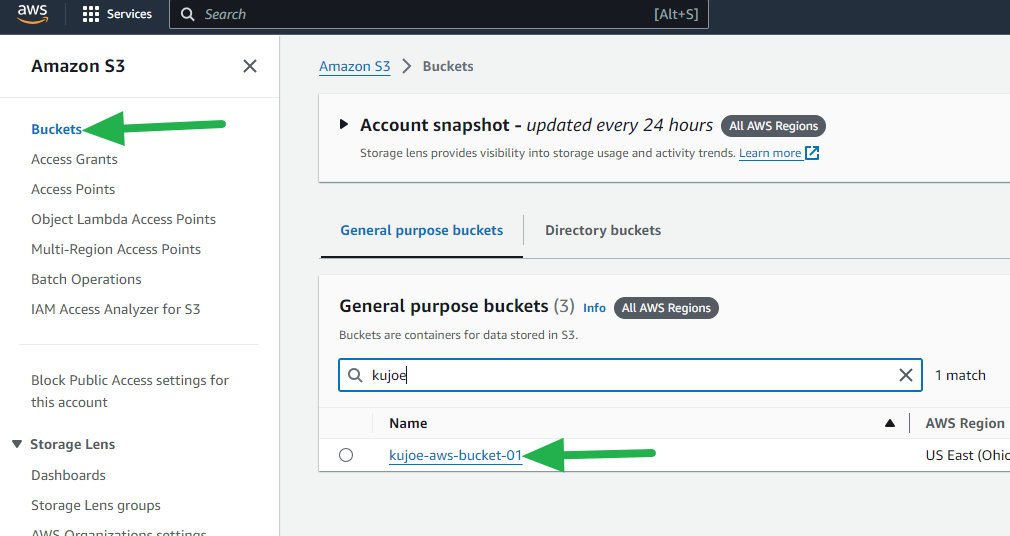

- Log in to AWS, navigate to your S3 buckets, and click on the bucket.

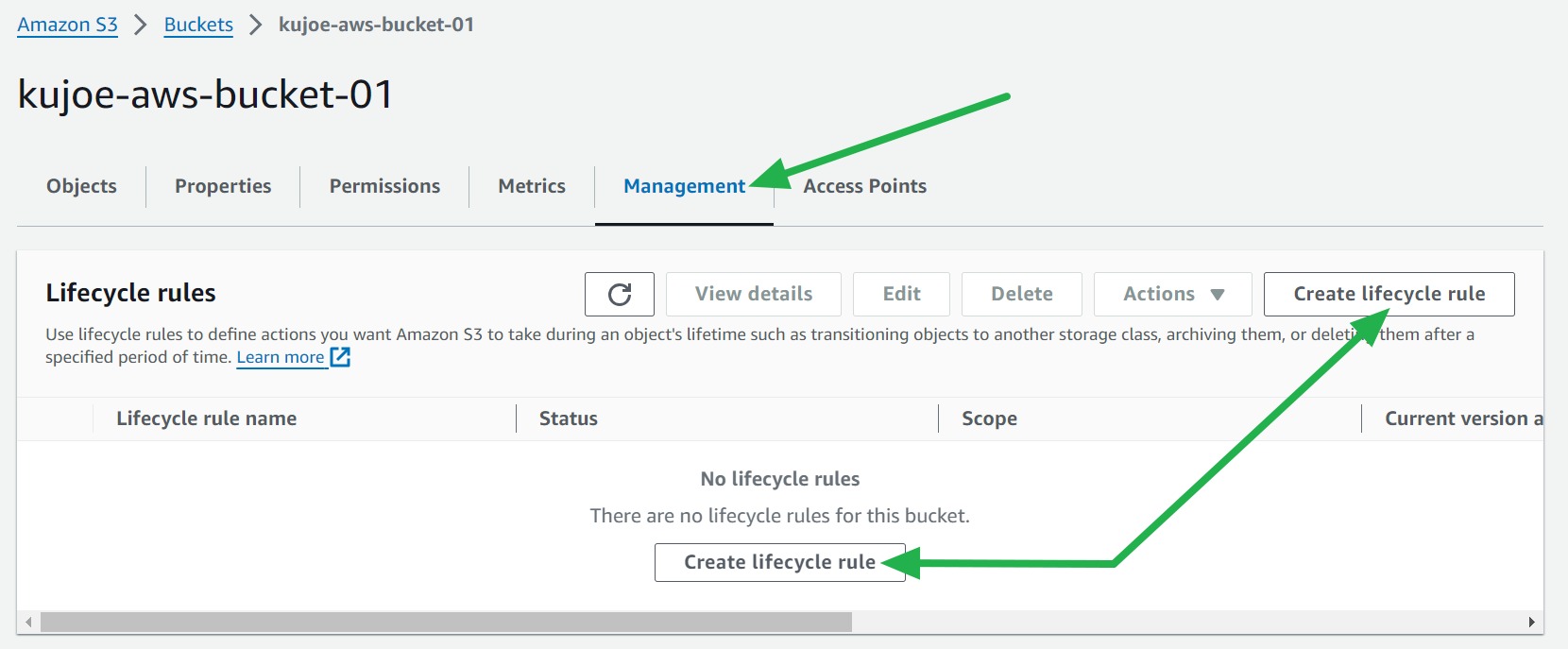

- Select the **Management** tab and then click on one of the **Create lifecycle rule** buttons.

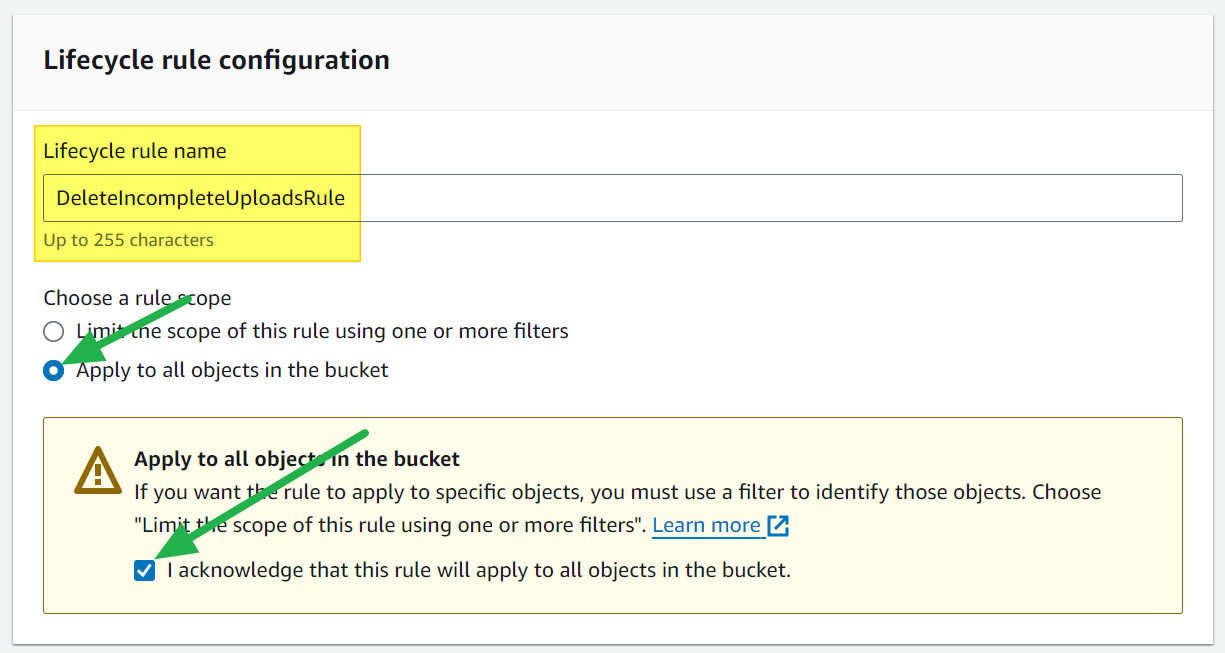

- Enter a name for the rule, select **Apply to all objects...**, and then check the **I acknowledge...** box.

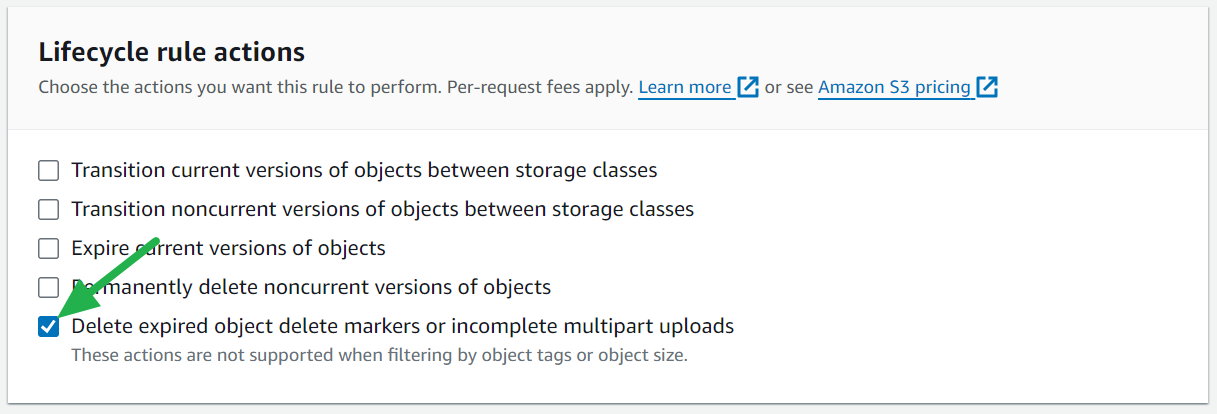

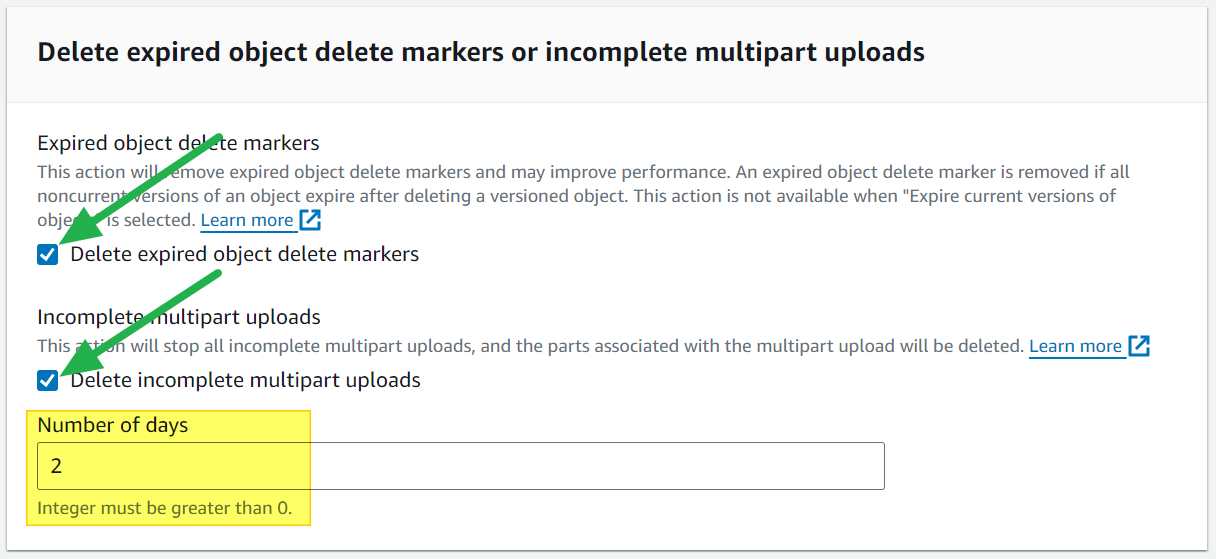

- Check only the **Delete expired object delete markers or incomplete multipart uploads** box.

- Check the **Delete expired...** and **Delete incomplete...** boxes and enter the number of days you'd like to keep the broken uploads for.

(I set this to 2 days in case I have large files that take a while to upload, so legitimate uploads don't get cleaned up before they finish.)

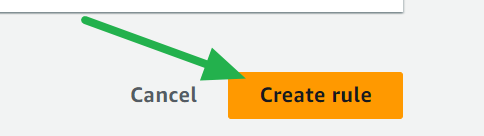

- Click the **Create rule** button and you're all set!

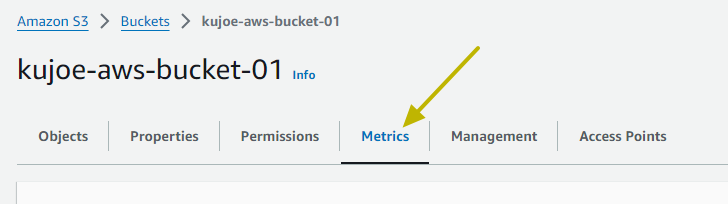
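If you'd rather script this (or just want to see what the console is building), here's a minimal Python/boto3 sketch of an equivalent rule. The keys mirror the checkboxes from the steps above; the rule ID is a placeholder, and I haven't confirmed this is exactly what the console generates, so compare it against your bucket before relying on it.

```python
"""The lifecycle rule from the console steps, as a boto3 lifecycle configuration.

Sketch assumptions: boto3 is installed, credentials are configured, and the
rule ID below is a placeholder name.
"""


def cleanup_rule(days_after_initiation=2, rule_id="cleanup-incomplete-uploads"):
    """One rule that applies to every object in the bucket."""
    return {
        "ID": rule_id,
        "Filter": {},  # empty filter = "Apply to all objects in the bucket"
        "Status": "Enabled",
        # "Delete incomplete multipart uploads" N days after the upload started
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days_after_initiation},
        # "Delete expired object delete markers"
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }


def apply_rule(bucket, rule):
    import boto3  # imported here so cleanup_rule() works without boto3

    s3 = boto3.client("s3")
    # WARNING: this call REPLACES the bucket's entire lifecycle configuration,
    # so merge in any existing rules first if the bucket already has some.
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": [rule]},
    )
```

The replace-everything behavior is the part that could bite you: if the bucket already has lifecycle rules, fetch them with `get_bucket_lifecycle_configuration` and append this rule to that list instead of sending it alone.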

It'll take a day or two for the rule to run, since from what I've read lifecycle rules are only evaluated once a day, but once it does you can check your stats under the Metrics tab for the bucket.
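The object count on the Metrics tab comes from CloudWatch's daily S3 storage metrics, so you can also pull it with a script. As I understand the CloudWatch docs, `NumberOfObjects` includes the parts of incomplete multipart uploads, which is why the count drops once the rule runs. This is a minimal boto3 sketch, assuming boto3 is installed, credentials are configured, and your default region matches the bucket's region; `print_object_counts` is a hypothetical helper name.

```python
"""Print the daily NumberOfObjects metric for a bucket from CloudWatch.

Sketch assumptions: boto3 is installed, credentials are configured, and the
client's default region is the bucket's region (S3 metrics are regional).
"""
from datetime import datetime, timedelta, timezone


def object_count_query(bucket, days=7, now=None):
    """Build get_metric_statistics arguments for the NumberOfObjects metric."""
    now = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/S3",
        "MetricName": "NumberOfObjects",
        "Dimensions": [
            {"Name": "BucketName", "Value": bucket},
            {"Name": "StorageType", "Value": "AllStorageTypes"},
        ],
        "StartTime": now - timedelta(days=days),
        "EndTime": now,
        "Period": 86400,  # storage metrics are daily, so one datapoint per day
        "Statistics": ["Average"],
    }


def print_object_counts(bucket, days=7):
    import boto3  # imported here so object_count_query() works without boto3

    cw = boto3.client("cloudwatch")
    resp = cw.get_metric_statistics(**object_count_query(bucket, days))
    # Datapoints are not guaranteed to be in order, so sort by timestamp.
    for point in sorted(resp["Datapoints"], key=lambda p: p["Timestamp"]):
        print(point["Timestamp"].date(), int(point["Average"]))
```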

After waiting about 36 hours my metrics updated, and I went from 18,735 files to 4,169 in a single bucket, with about 200GB of staging files cleaned up while my actual Glacier storage usage stayed the same. Since a lot of my files are tens of gigabytes in size, I'm guessing each one is made up of thousands of parts, so a single failed upload can leave several hundred leftover parts out there. This should make your life (and your wallet) a little better.
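A quick way to sanity-check the "thousands of parts" guess is to divide the file size by the part size. This sketch assumes 8 MiB parts, which is the AWS CLI's default `multipart_chunksize`; other backup tools may pick different sizes, and since S3 caps a single upload at 10,000 parts, very large files have to use bigger parts.

```python
import math

MIB = 1024 ** 2
GIB = 1024 ** 3


def part_count(file_bytes, part_bytes=8 * MIB):
    """Number of parts a multipart upload of `file_bytes` is split into.

    Assumes a fixed part size (8 MiB by default); the last part may be smaller.
    """
    return math.ceil(file_bytes / part_bytes)


if __name__ == "__main__":
    total = part_count(20 * GIB)  # a 20 GiB file in 8 MiB parts
    print(total)                  # 2560
    print(total // 2)             # parts left behind if it fails halfway: 1280
```

So under that assumption, a single 20 GiB upload that dies halfway could leave over a thousand orphaned parts, which fits with the object count dropping by more than 14,000.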

Happy Clouding!

Go out and do good things!

-KuJoe